What is Application Modernization?

In today’s fast-paced market, enterprises must evolve to stay competitive. Many struggle with outdated legacy systems and architectures that limit their ability to harness the latest technological advancements.

What Is a Managed Security Service Provider (MSSP)?

As businesses face increasing threats of cyber-attacks, many are turning to Managed Security Service Providers (MSSPs) to safeguard their digital assets.

Solving the Biggest IT Challenges in the Manufacturing Sector

The integration of new technologies like artificial intelligence (AI), automation, and the Internet of Things (IoT) has become paramount to sustaining competitiveness and driving growth in the manufacturing sector.

The Future of Workspace Setup with ‘Branch-in-a-Box’ Solution: Importance, Benefits, and Use Cases

Business growth today depends heavily on agility. As businesses grow, new branches, stores, and workspaces need to be set up quickly and efficiently.

Netskope and Sify Partner to Deliver Managed SASE Services With Netskope One Platform

In the rapidly evolving digital landscape, Software-Defined Wide Area Networking (SD-WAN) has emerged as a pivotal technology for enterprises looking to enhance their network infrastructure’s flexibility, agility, reliability, and security. As you consider making a critical decision for your enterprise, understanding what sets the best SD-WAN solutions and managed SD-WAN providers apart from the rest is crucial. This blog aims to guide you through the decision, helping you align your organizational needs with the capabilities offered by leading SD-WAN providers in India.

What is desktop as a service (DaaS) and how can it help your organization?

Desktop as a Service (DaaS) is becoming increasingly popular among businesses looking to enhance their IT infrastructure.

Boost Your SAP S/4HANA Journey with Sify & LeapGreat’s Innovative Solution

Meet us at Sapphire 2024 and learn how to accelerate your SAP S/4HANA journey through automation with Sify & LeapGreat’s innovative solution.

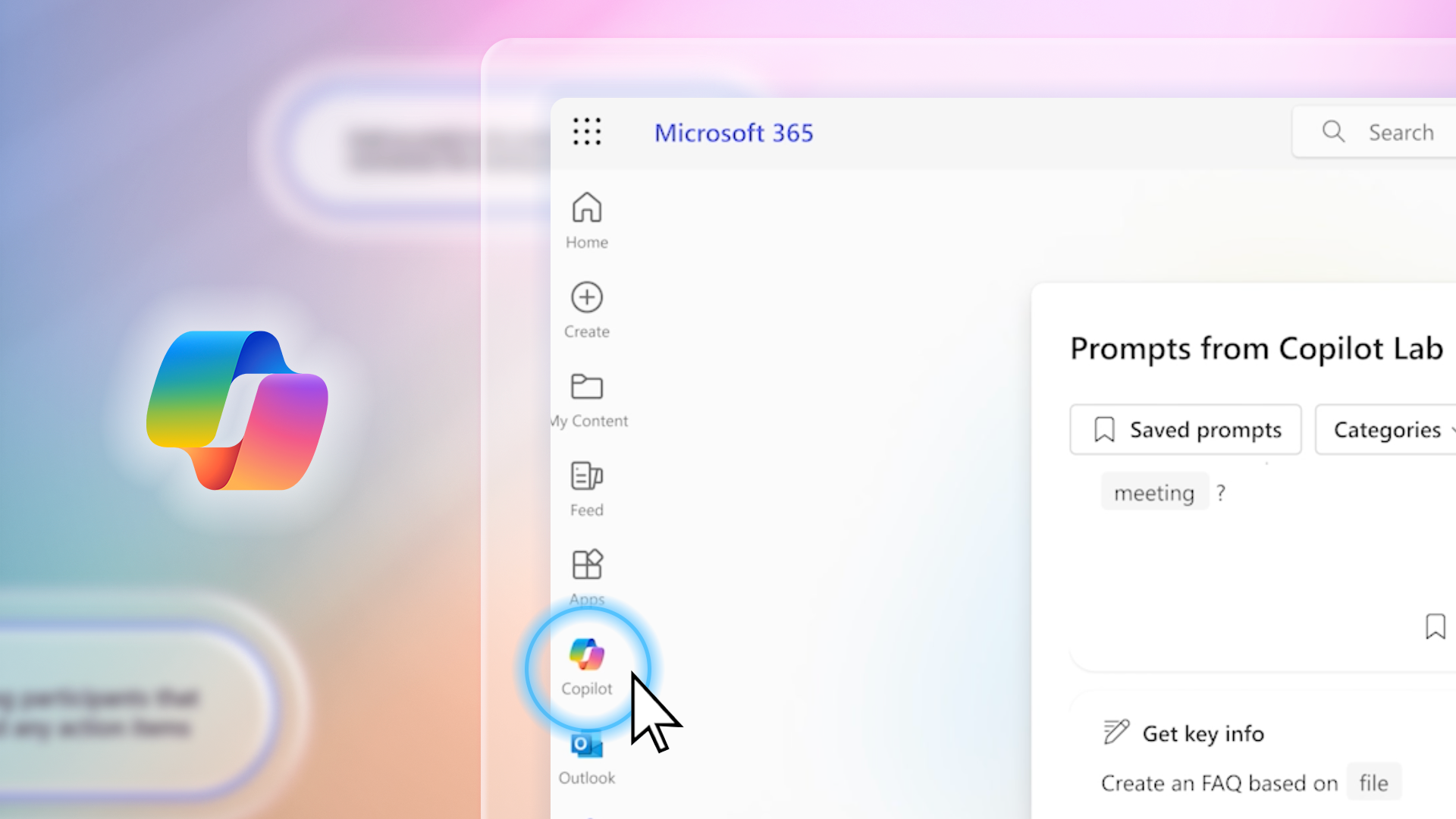

Getting Your Nonprofit Organisation Ready to Implement Microsoft Copilot

As a nonprofit professional, you’re constantly seeking ways to maximise productivity and efficiency to further your mission.

What is hyperscale cloud?

Robust, reliable data centers are the infrastructural backbone of internet-based services, cloud computing, and the end-to-end management of vast amounts of data.

Modern Digital Asset Management (DAM): A Strategic Imperative for Brands to Achieve Success

In today’s digital-first world, consumers expect personalized, relevant, and engaging content at every interaction. Brands are channelling substantial investments into creating compelling content to enhance engagement and drive conversions. Yet despite these investments, many brands have limited visibility into how their content performs across diverse channels and campaigns.