Cloud Service Models Compared: IaaS, PaaS & SaaS

Cloud computing dominates business discussions across the world because it serves the whole business ecosystem, from small companies to large enterprises. When adopting the technology, companies face a choice between three predominant cloud service models: SaaS, PaaS, and IaaS. A company may select among them based on its needs and the capabilities of each model. Each model has its own advantages and characteristics.

SaaS (Software as a Service)

SaaS service models have captured the largest share in the cloud world. In SaaS, third-party service providers deliver applications, and clients access them through a Web interface. The cloud service provider manages everything, including hardware, networking, runtime, data, middleware, operating systems, and applications. Some SaaS services that are popular in the business world are Salesforce, GoToMeeting, Dropbox, Google Apps, and Cisco WebEx.

Service Delivery: A SaaS application is made available over the Web and can be installed on-premise or can be executed right from the browser, depending upon the application. As opposed to traditional software, SaaS-based software is delivered predominantly in subscription-based pricing. While popular end-user applications such as Dropbox and MS Office Apps offer a free trial for a limited period, their extended usage, integrations, and customer support could come at a nominal subscription cost.

How to identify if it is SaaS? If everything is being managed from a centralized location on a cloud platform by your service provider, and your application is hosted on a remote server to which you are given the access through Web-based connectivity, then it is likely to be SaaS.

Benefits: The cost of licensing is lower in this model, and it also provides a mobility advantage to the workforce, as the applications can be accessed from anywhere using the Web. In this model, everything at the back end is taken care of by the service provider while the client uses the features of specific applications. If any technical issues arise in the infrastructure, the client can depend on the service provider to resolve them.

When to Choose? You can choose this model if you do not want to take the burden of managing your IT infrastructure as well as the platform, and only want to focus on the respective application and services. You can pass on the laborious work of installation, upgrading, and management to the third-party companies that have expertise in public cloud management.

PaaS (Platform as a Service)

In the PaaS service model, the third-party service provider delivers software components and the framework to build applications while clients can take care of the development of the application. Such a framework allows companies to develop custom applications over the platform that is served. In this model, the service provider can manage servers, virtualization, storage, software, and networking while developers are allowed to develop customized applications. PaaS model can work with both private cloud and public cloud.

Service Delivery: A middleware is built into the model which can be used by developers. The developer does not need to do hard coding from scratch as the platform provides the libraries. This reduces the development time and enhances the productivity of an application developer enabling companies to reduce time-to-market.

How to identify if it is PaaS? If you are using integrated databases, have resources made available that can quickly scale, and you have access to many different cloud services to help you in developing, testing, and deploying applications, it is PaaS.

Benefits: The processes of development and testing are both cost-effective and fast. The PaaS model delivers an operating environment and some on-demand services such as CRM, ERP, and Web conferencing. With PaaS, you can also use additional microservices to enhance your run-time quality. Additional services such as directory, workflow, security, and scheduling are also available. Other benefits of using this service model include cross-platform development, built-in components, no licensing cost, and efficient application lifecycle management.

When to Choose? PaaS is most suited if you want to create your application but need others to maintain the platform for you. When your developers need creative freedom to build highly customized applications and require you to provide tools for development, this would be the model to select.

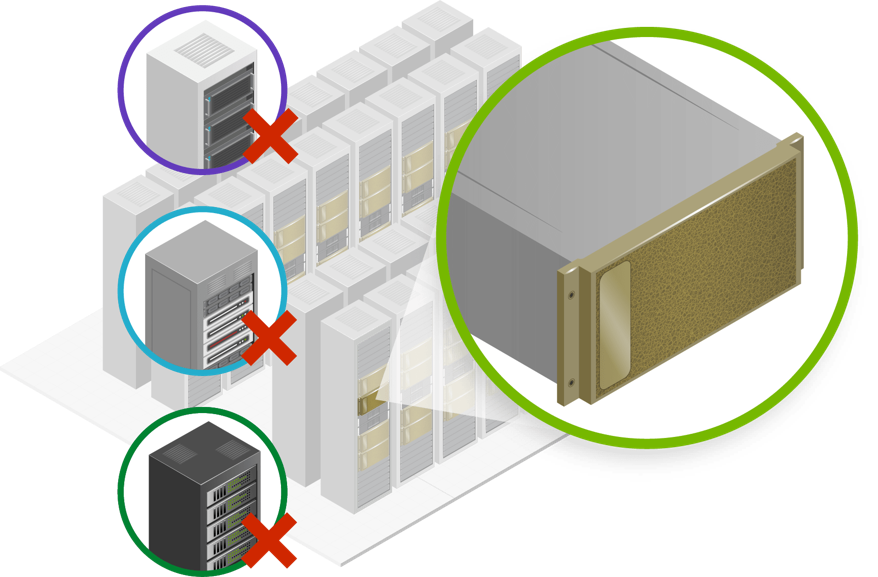

IaaS (Infrastructure as a Service)

In this cloud service model, Data Center infrastructure components are provided including servers, virtualization, storage, software, and networking. This is a pay-as-you-go model which provides access to all services that can be utilized as per your needs. IaaS is like renting space and infrastructure components from a cloud service provider using a subscription model.

Service Delivery: The infrastructure can be managed remotely by a client. On this infrastructure, companies can install their own platforms and do the development. Some popular examples of IaaS service models are Microsoft Azure, Amazon Web Services (AWS), and Google Compute Engine (GCE).

How to identify if it is IaaS? If you have all the resources available as a service, your cost of operation is relative to your consumption, and you have complete control over your infrastructure, it is IaaS.
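The consumption-based pricing described above can be sketched in a few lines of Python. This is only an illustration: the resource names, usage figures, and unit rates are hypothetical and do not come from any provider's price list.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class UsageRecord:
    """One metered resource on a bill (names and rates are hypothetical)."""
    resource: str
    units_used: float     # e.g. VM-hours or GB-months
    rate_per_unit: float  # price per unit, in a single currency


def pay_as_you_go_cost(records: List[UsageRecord]) -> float:
    """Total cost is the sum of consumption times the unit rate."""
    return sum(r.units_used * r.rate_per_unit for r in records)


# Illustrative light month: two VMs running 300 hours each, plus 500 GB stored.
light_month = [
    UsageRecord("vm-hours", 2 * 300, 0.05),
    UsageRecord("storage-gb-months", 500, 0.02),
]

# Same assumed rates, but both VMs run around the clock for 30 days.
busy_month = [
    UsageRecord("vm-hours", 2 * 24 * 30, 0.05),
    UsageRecord("storage-gb-months", 500, 0.02),
]

print(f"light month: {pay_as_you_go_cost(light_month):.2f}")
print(f"busy month:  {pay_as_you_go_cost(busy_month):.2f}")
```

The bill rises and falls with usage, which is the behavior the identification rule above looks for.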

Benefits: A company need not invest heavily in infrastructure deployment but can use virtual Data Centers. A major advantage of this service model is that with it, a single API (Application Programming Interface) can be used to access services from multiple cloud providers. A virtualized interface can be used over pre-configured hardware, and platforms can be installed by a client. IaaS service providers also give you security features for the management of your infrastructure through licensing agreements.

When to Choose? IaaS service model is most useful when you are starting a company and need hardware and software setups for your company. You may not commit to specific hardware or software but can enjoy the freedom of scaling up anytime you need with this deployment.

You can choose between the three models depending on your business needs and availability of resources to manage things. Irrespective of the model you choose, cloud Data Center does provide you a great cost advantage and flexibility with experts to back you in difficult times. Your choice of the cloud service model would affect the level of control you have over your infrastructure and applications. Depending on the needs of your business, you can select a model after a careful evaluation of the benefits of each of the cloud service models.
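One way to picture the difference in control is as a split of the technology stack between provider and client. The sketch below is a simplification under stated assumptions: the layer names and the exact split for each model follow the commonly used convention rather than any specific provider's contract, and `suggest_model` is a hypothetical helper that only encodes the "When to Choose?" guidance from the sections above.

```python
from typing import Dict, List

# Layers of a typical stack, from the bottom up (assumed, simplified).
STACK: List[str] = [
    "networking", "storage", "servers", "virtualization",
    "operating system", "middleware", "runtime", "data", "applications",
]

# Index into STACK of the first layer the client manages under each model.
FIRST_CLIENT_LAYER: Dict[str, int] = {
    "IaaS": STACK.index("operating system"),
    "PaaS": STACK.index("data"),
    "SaaS": len(STACK),  # the provider manages every layer
}


def managed_by(model: str) -> Dict[str, List[str]]:
    """Return which layers the provider and the client manage for a model."""
    split = FIRST_CLIENT_LAYER[model]
    return {"provider": STACK[:split], "client": STACK[split:]}


def suggest_model(manage_infrastructure: bool, build_custom_apps: bool) -> str:
    """Hypothetical helper mirroring the 'When to Choose?' guidance."""
    if manage_infrastructure:
        return "IaaS"  # you want full control over the infrastructure
    if build_custom_apps:
        return "PaaS"  # you build the app, someone else runs the platform
    return "SaaS"      # you only want to use the application


for model in ("IaaS", "PaaS", "SaaS"):
    print(model, "client manages:", managed_by(model)["client"] or "nothing")
```

The further the split moves up the stack, the less the client manages and the less control it keeps.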

Sify’s many enterprise-class cloud services deliver massive scale and geographic reach with minimum investment. We help design the right solution to fit your needs and budget, with ready-to-use compute, storage and network resources to host your applications on a public, private or hybrid multi-tenant cloud infrastructure.

From Legacy to the Modern-day Data Center Cooling Systems

Modern-day Data Centers provide massive computational capabilities while having a smaller footprint. This poses a significant challenge for keeping the Data Center cool, since more transistors in computer chips mean more heat dissipation, which requires greater cooling. As a result, traditional cooling systems are no longer adequate for modern Data Center cooling.

Legacy Cooling: Most Data Centers still use legacy cooling systems. They use raised floors to deliver cold air from Computer Room Air Conditioner (CRAC) units to the Data Center servers. Perforated tiles allow the cold air to leave the plenum and enter the main area near the servers. Once this air passes through the server units, the heated air is returned to the CRAC unit for cooling. CRAC units also have humidifiers that produce steam, so they maintain the required humidity conditions as well.

However, as room dimensions have increased in modern Data Centers, legacy cooling systems have become inadequate. These Data Centers need additional cooling systems besides the CRAC unit. Here is a list of techniques and methods used for modern Data Center cooling.

Server Cooling: Heat generated by the servers is absorbed and drawn away using a combination of fans, heat sinks, and pipes within ITE (Information Technology Equipment) units. Sometimes, a server immersion cooling system may also be used for enhanced heat transfer.

Space Cooling: The overall heat generated within a Data Center is transferred to the air and then to a liquid using the CRAC unit.

Heat Rejection: Heat rejection is an integral part of the overall cooling process. The heat taken from the servers is displaced using CRAC units, CRAH (Computer Room Air Handler) units, split systems, airside economization, direct evaporative cooling, and indirect evaporative cooling systems. An economizing cooling system turns off the refrigerant cycle and draws air from outside into the Data Center so that the inside air mixes with the outside air to create a balance. These systems use evaporated water to supplement this process by absorbing energy into chilled water and then lowering the bulb temperature to match the temperature of the air.
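The mixing of inside and outside air in an economizer can be approximated with a simple weighted average. The sketch below is an idealized model, not a controller design: it assumes both air streams have the same density and specific heat, ignores humidity, and uses a hypothetical function name and example temperatures.

```python
from typing import Optional


def outside_air_fraction(return_temp_c: float,
                         outside_temp_c: float,
                         target_supply_c: float) -> Optional[float]:
    """Fraction of outside air (0.0 to 1.0) needed to hit the target temperature.

    Idealized mixing: T_mix = f * T_outside + (1 - f) * T_return.
    Returns None when outside air alone cannot reach the target, in which
    case mechanical cooling would still be required.
    """
    if outside_temp_c >= return_temp_c:
        return None  # outside air is no cooler than the return air
    fraction = (return_temp_c - target_supply_c) / (return_temp_c - outside_temp_c)
    if fraction > 1.0:
        return None  # even 100% outside air would be too warm
    return max(fraction, 0.0)  # a target above return temp needs no outside air


# Illustrative values: 35 C return air, 15 C outside air, 25 C supply target.
print(outside_air_fraction(35.0, 15.0, 25.0))
# A warm day: 30 C outside air cannot bring 35 C return air down to 25 C.
print(outside_air_fraction(35.0, 30.0, 25.0))
```

In the first case half of the supply air comes from outside; in the second the function signals that free cooling is not enough on its own.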

Containments: Hot and cold aisle containment uses air handlers to contain cool or hot air and let the remaining air out. A hot containment would contain hot exhaust air and let cooler air out, while a cold containment would do the reverse. Many new Data Centers use hot aisle containment, which is considered a more flexible cooling solution as it can meet the demands of increased system density.

Close-Coupled cooling: Close-Coupled Cooling or CCC includes above-rack, in-rack, or rear-door heat exchanger systems. It involves bringing the cooling system closer to the server racks themselves for enhanced heat exchange. This technology is very effective as well as flexible, with long-term provisions, but it requires significant investment.

Conclusion

Companies can choose a cooling system based on the cooling needs, infrastructure density, uptime needs, space factors, and cost factors. The choice of the right cooling system becomes critical when the Data Center needs to have high uptime and avoid any downtime due to energy issues.

Sify offers state-of-the-art Data Centers to ensure the highest levels of availability, security, and connectivity for your IT infrastructure. Our Data Centers are strategically located in different seismic zones across India, with highly redundant power and cooling systems that meet and even exceed the industry’s highest standards.

Five ways cloud is transforming the business world

Organizations around the globe are moving toward cloud technology and cloud platforms for enhanced data management and security, cost-efficient services, and, of course, the ability to access distributed computing and storage facilities from anywhere, anytime.

A recent study found that nearly 41% of the surveyed respondents showed interest in investing in and increasing their spending on cloud technologies, with over 51% of large and medium companies planning to expand their budgets for cloud tech. As a result of this rapidly increasing interest in and demand for cloud-based services, cloud computing service providers and cloud computing companies are on the rise.

But why is the business world rapidly shifting to cloud computing?

Cloud computing can bring about major positive transitions for businesses as it offers grounds for innovation coupled with organizational efficiency. Let’s look at the five ways in which cloud computing is transforming the business world.

1. Enhanced Operations

One of the best features of cloud computing solutions is that they can scale as a company grows. Cloud computing service providers allow companies (big or small) to move a part or all of their operations from a local network to the cloud platform, thereby making it easier for them to access a host of facilities such as data storage, data processing, and much more. Usually, cloud computing service providers have a strong and dedicated support team that can assist users through real-time communication.

Another major benefit of using a third-party service is that the responsibility for data and system management, and the associated risks, fall under the purview of the service provider. So, one can take advantage of the cloud services without having to worry about the risks.

2. Cost Reduction

Cloud computing services are highly cost-effective. Companies and businesses using the services of third-party cloud computing providers need not bear extra expenses in setting up the required infrastructure or hire additional in-house IT professionals for installing, managing, and upgrading the systems. As mentioned above, all these needs are taken care of by the cloud computing providers. Also, small companies can take advantage of the same tools and resources that are used by large corporations, without having to incur additional IT overhead. This means cloud computing services can reduce IT costs and increase the operating capital which can be steered towards improving other core areas of the business. With increased productivity, efficiency, flexibility and reduced costs, businesses can become more innovative and agile in their operations.

3. Fortified Security And Storage

Unlike in the past, cloud computing service providers are now extremely cautious about the security and safety of their users. All the sensitive user data, files, and other important documents are stored across a distributed network. Since the data is never stored in one single physical device, and since encrypted passwords and restricted user access are enforced, the safety and security of the user data are enhanced. Steps are taken to further protect the data by incorporating firewall and anti-malware software within the cloud infrastructure.

Furthermore, cloud computing service providers allow businesses to leverage the best quality hardware for faster data access and exchange. This further boosts the operational efficiency, speed, and productivity.

4. Improved Flexibility

With cloud services, employees can access the same resources while working remotely that they could access while working from the office. Thanks to cloud technology, employees can now work from the comfort of their homes and get work done seamlessly. Mobile devices make this even more convenient by allowing employees to enjoy the flexibility of working at their own pace while also facilitating real-time communication between them and the users. Cloud companies can, thus, deliver better and more efficient services by investing in a band of dedicated remote employees instead of maintaining a full house of on-site employees. This fast-growing mobile workforce delivering quality cloud solutions is a big reason why businesses today are making the transition to cloud computing providers.

5. Better Customer Support

Today, cloud computing providers have upgraded their game by offering an array of support options for businesses to choose from. Apart from the conventional telephone service, businesses can now opt for AI-powered chatbots that can interact with customers like a real human being. Most cloud computing providers offer impressive bandwidth, which improves communications and allows a firm’s customer support team to handle customers’ requests swiftly. The prompt delivery of support services ensures that customers don’t have to wait for hours for their queries and requests to be addressed. All this together leads to a richer customer support experience.

The end result? Happy customers who endorse the brand to a larger network.

Cloud computing is a versatile platform that offers an extensive variety of solutions to the common challenges and hurdles that businesses face in their day-to-day functioning. And that is precisely why cloud computing solutions and services are increasingly penetrating the business world by the minute and transforming it for the better.


Five major challenges during Data Center migration

Data Center migration is essential for companies looking to meet the growing demands of the IT/data services. However essential, this process comes with its own set of challenges. Thus, it would be wise to tread carefully and assess both the core necessities and challenges that usually accompany Data Center migration.

Data Center migration involves moving a part or the entire IT operation of a company from the existing Data Center to another, either physically or virtually (shared Data Centers via the cloud). Here are the five basic challenges, or rather considerations to make for a successful Data Center migration:

1. Service Provider Credentials

Before rushing into a collaboration with a Data Center migration provider, it is important to assess the service provider’s business. What is their track record of providing Data Center services and maintaining colocation Data Centers? Do they operate by purchasing Data Center services from multiple providers? What are the terms and conditions? Usually, a provider that leases services instead of owning its facilities is less likely to offer the stability or efficiency needed to deliver the pivotal IT needs of a digital enterprise.

Hence, doing a little research ahead of the Data Center migration about the provider helps assess the quality of services of the provider.

2. Customer Service

Excellent customer service is expected of any service, and Data Center migration is no exception. Providers that have built a good track record in the market have done so not only by delivering seamless and actionable IT/data solutions but also by attending to the minor troubles and issues faced by their customers. The services of good providers are accompanied by on-demand expert assistance with short wait times. Also, one has to consider whether or not a prospective provider can meet all the expectations and business demands as and when necessary. Thus, companies should always dig a little deeper to learn about the customer service of a provider before choosing one for Data Center migration.

3. Data Center Location

The location of a service provider’s Data Center is a major factor for the future operations of a company. A provider may promise to deliver all the core needs of a company today but fail to keep that promise in the future. For instance, if a provider takes over another Data Center provider’s location, the two sets of facilities may fall within close proximity of each other. As a result, the service provider may feel it’s unnecessary to have multiple Data Centers situated closely and may shut down some locations. This can become a huge inconvenience if a company’s workloads sit in a location that is being phased out. Thus, to avoid such situations, it’s best to choose a provider with its own strategically located facilities.

4. Service Bundling

Customers can benefit hugely from Data Center providers that give users access to both facility resources and network connectivity. However, not all providers are able to deliver this. Providers that do not own their facilities, locations, and operations often collaborate with third-party providers or platforms, which may cease to exist in the future. When that happens, it is sure to affect a company’s operations, and customers may end up having to deal with two separate providers that can no longer offer seamless and efficient services.

5. Reliability

Finally, one of the most important factors to consider for Data Center migration is the reliability of the service provider. To determine this, one has to analyze the security systems, HVAC features, OPEX, availability and uptime, and other such measures. It would be wise to choose a provider with a history of very few service outages, since a service outage can cost you dearly. Also, while choosing a provider, one should check whether it is a certified Data Center that offers stable, cost-efficient, and state-of-the-art services.
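When comparing availability and uptime figures, it helps to convert a percentage into the downtime it permits. The short sketch below does that arithmetic; the function name and the sample availability levels are illustrative and not taken from any provider's service agreement.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def annual_downtime_hours(availability_percent: float) -> float:
    """Hours per year a service may be down at a given availability level."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    return HOURS_PER_YEAR * (1.0 - availability_percent / 100.0)


# Compare a few illustrative availability levels.
for pct in (99.0, 99.9, 99.99):
    hours = annual_downtime_hours(pct)
    print(f"{pct}% availability allows about {hours:.2f} hours of downtime per year")
```

A difference that looks small on paper, such as 99% versus 99.9%, separates roughly 87.6 hours of permitted downtime per year from roughly 8.76 hours.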

These are the five core areas where companies can face challenges while opting for a Data Center migration. However, they can be overcome if addressed with a little caution and a risk-assessment approach.


Keys to Successful Data Center Operations

For one reason or another, every business requires a Data Center at some point. There is an ever-increasing demand for data everywhere, and as a result of this, companies require more and more processing power and storage space.

There isn’t a specific kind of company that will require a Data Center, but some are more likely to require it than others. For instance, any kind of business which uses, processes, or stores a lot of data will definitely require a Data Center. These businesses can be educational institutions – like schools or colleges, telecom companies, or even social networking services. Without constant access to data, these companies can fall short on providing essential services. This can lead to loss of customer satisfaction as well as revenue.

Earlier, businesses only had the option of going for a physical Data Center, where data would be stored across several devices in a single facility. At such a time, to ensure smooth operations of the Data Center, it was enough simply to have an efficient cooling strategy in which power was judiciously used.

With the rise in technology, however, cloud servers are now available on which data can be stored remotely. As a result of this, the future of Data Centers is one in which all devices are connected across several different networks. This requires more Data Center elements than existed previously. Moreover, the metrics on which the efficiency of a Data Center is judged have also evolved.

There are now 4 factors according to which the success of a Data Center can be determined. They are:

- Infrastructure

- Optimization

- Sizing

- Sustainability

Infrastructure

A lot of businesses forget the fact that infrastructure can directly impact the performance of a network. Maximizing network performance can be achieved by paying attention to three parts of the complete infrastructure – the first being structured cabling, the second being racks and cabinets, and the third being cable management.

To take just one example, scalable as well as feasible rack and cabinet solutions are an effective way of realizing this. Not only can they accommodate greater weight thresholds, but they also have movable rails and broader vertical managers. This provides options for increased cable support, airflow, and protection.

Optimization

The faster a Data Center expands, the quicker it grows in terms of size and complexity. This requires significantly quicker deployment. A Data Center needs to be updated regularly to support the growing needs of a business. Purchasing infrastructure solutions that save time is a wise decision in this regard: it helps in manifold ways by making it easier to move infrastructure, or to make additions to or removals from an already existing setup.

A modular solution can become the foundation for scalable building infrastructure and save time as well! Modular racks and cabinets can be put together quickly, and also have adjustable rails and greater weight thresholds. Thus, they can accommodate new equipment very easily. Such a modular solution can support future changes in the network as well, without increasing the scope of disruption.

Sizing

Earlier, the one key factor in assessing the efficiency of a Data Center in terms of size was to see how fast it would grow. Accordingly, the infrastructure supporting it would also grow. While sounding simple in theory, such a principled decision – that expansion will happen without any forethought – is detrimental both in terms of capital as well as energy.

The truth is that space is a premium everywhere – so why shouldn’t this be the case while considering a Data Center? An infrastructure system should always be built for optimization, so that the process of scaling is straightforward and not beset by liabilities of any kind. One simple way to achieve this is to adopt the rack as the basic building block for a Data Center.
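Treating the rack as the basic building block makes sizing a small calculation: how many devices fit per rack, by space and by weight, and therefore how many racks are needed. The sketch below assumes a 42U rack and a 1000 kg weight threshold purely for illustration; real limits depend on the rack model and the facility.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Rack:
    usable_units: int = 42       # rack units (U); 42U is assumed here
    max_load_kg: float = 1000.0  # hypothetical weight threshold


def racks_needed(device_units: int, device_weight_kg: float,
                 device_count: int, rack: Rack = Rack()) -> int:
    """Racks required when every device has the same height and weight."""
    per_rack_by_space = rack.usable_units // device_units
    per_rack_by_weight = int(rack.max_load_kg // device_weight_kg)
    per_rack = min(per_rack_by_space, per_rack_by_weight)
    if per_rack == 0:
        raise ValueError("a single device exceeds the rack's space or weight limit")
    return math.ceil(device_count / per_rack)


# 100 servers, each 2U and 25 kg: space is the limiting factor (21 per rack).
print(racks_needed(device_units=2, device_weight_kg=25.0, device_count=100))
# 30 storage units, each 4U and 120 kg: weight is the limiting factor (8 per rack).
print(racks_needed(device_units=4, device_weight_kg=120.0, device_count=30))
```

Planning in whole racks like this keeps growth predictable, since each added rack brings a known amount of space and load capacity.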

Sustainability

Sustainability is not a singular concept. While it is often associated with not destroying natural resources, it can also be tailored to achieve the opposite effect – to conserve them. A myth is often propagated regarding natural resources – that it is more expensive to streamline processes to be sustainable. The truth is that it costs the same and, moreover, it has a lot of benefits as well.

When sustainable manufacturers design solutions which lower the overall impact your Data Center will have on the environment, it translates into more flexibility in terms of design, shorter installation times, as well as reduction in material waste on site – and much, much more. The key factor is energy efficiency, and therefore all other processes are streamlined to fit this metric.

It is no longer enough simply to consider effective cooling and energy solutions as the be-all and end-all of Data Center operations. Data Centers play a crucial role in terms of a business’ overall success, and trying simply to maximize efficiency within a Data Center is a short-sighted target. Other forms of efficiency can equip the Data Center to be changed later at reduced costs, thus making it more capital-effective.

Ultimately, the goal should be to make the Data Center have efficient infrastructure and optimized modular solutions. In addition, it should be scalable in terms of size, without incurring liabilities, and should also be sustainable, as it will help with all of the above.


How Data Centers work (and how they’re changing)

A Data Center is usually a physical location in which enterprises store their data as well as other applications crucial to the functioning of their organization. Most often these Data Centers store a majority of the IT equipment – this includes routers, servers, networking switches, storage subsystems, firewalls, and any extraneous equipment which is employed. A Data Center typically also includes appropriate infrastructure which facilitates storage of this order; this often includes electrical switching, backup generators, ventilation and other cooling systems, uninterruptible power supplies, and more. This obviously translates into a physical space in which these provisions can be stored and which is also sufficiently secure.

But while Data Centers are often thought of as occupying only one physical location, in reality they can also be dispersed over several physical locations or be based on a cloud hosting service, in which case their physical location becomes all but negligible. Data Centers too, much like any technology, are going through constant innovation and development. As a result of this, there is no one rigid definition of what a Data Center is, no all-encompassing way to imagine what they are in theory and what they should look like on the ground.

A lot of businesses these days operate from multiple locations at the same time or have remote operations set up. To meet the needs of these businesses, their Data Centers will have to grow and learn with them – the reliance is not so much on physical locations anymore as it is on remotely accessible servers and cloud-based networks. Because the businesses themselves are distributed and ever-changing, the need of the hour is for Data Centers to be the same: scalable as well as open to movement.

And so, new key technologies are being developed to make sure that Data Centers can cater to the requirements of a digital enterprise. These technologies include –

- Public Clouds

- Hyper converged infrastructure

- GPU Computing

- Micro segmentation

- Non-volatile memory express

Public Clouds

Businesses have always had the option of building a Data Center of their own, for which they could use either a managed service partner or a hosting vendor. While this shifted the ownership as well as the economic burden of running a Data Center entirely, it couldn’t have as drastic an effect due to the time it took to manage these processes. With the rise of cloud-based Data Centers, businesses now have the option of having a virtual Data Center in the cloud without the waiting time or the inconvenience of having to physically reach a location.

Hyper converged infrastructure

What hyper converged infrastructure (HCI) does is simple: it takes out the effort involved in deploying appliances. Impressively, it does so without disrupting the already ongoing processes, beginning from the level of the servers, all the way to IT operations. This appliance provided by HCI is easy to deploy and is based on commodity hardware which can scale simply by adding more nodes. While early uses that HCI found revolved around desktop virtualization, recently it has grown to being helpful in other business applications involving databases as well as unified communications.

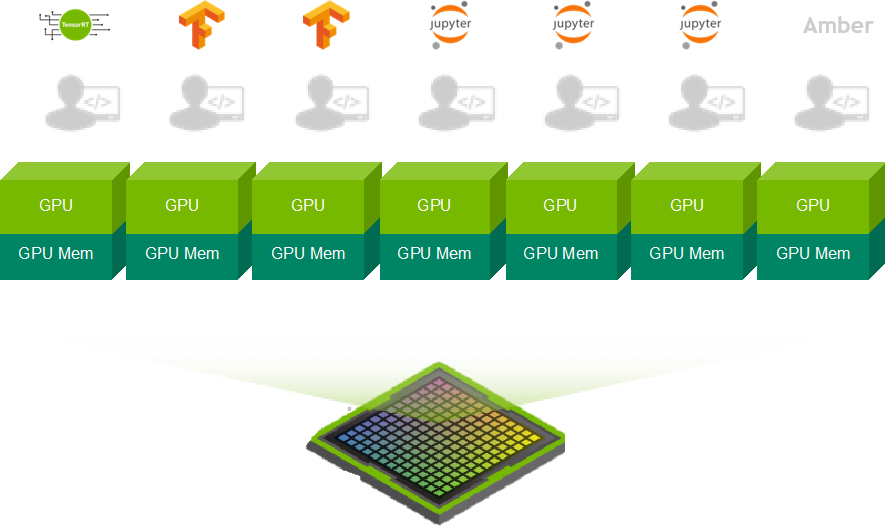

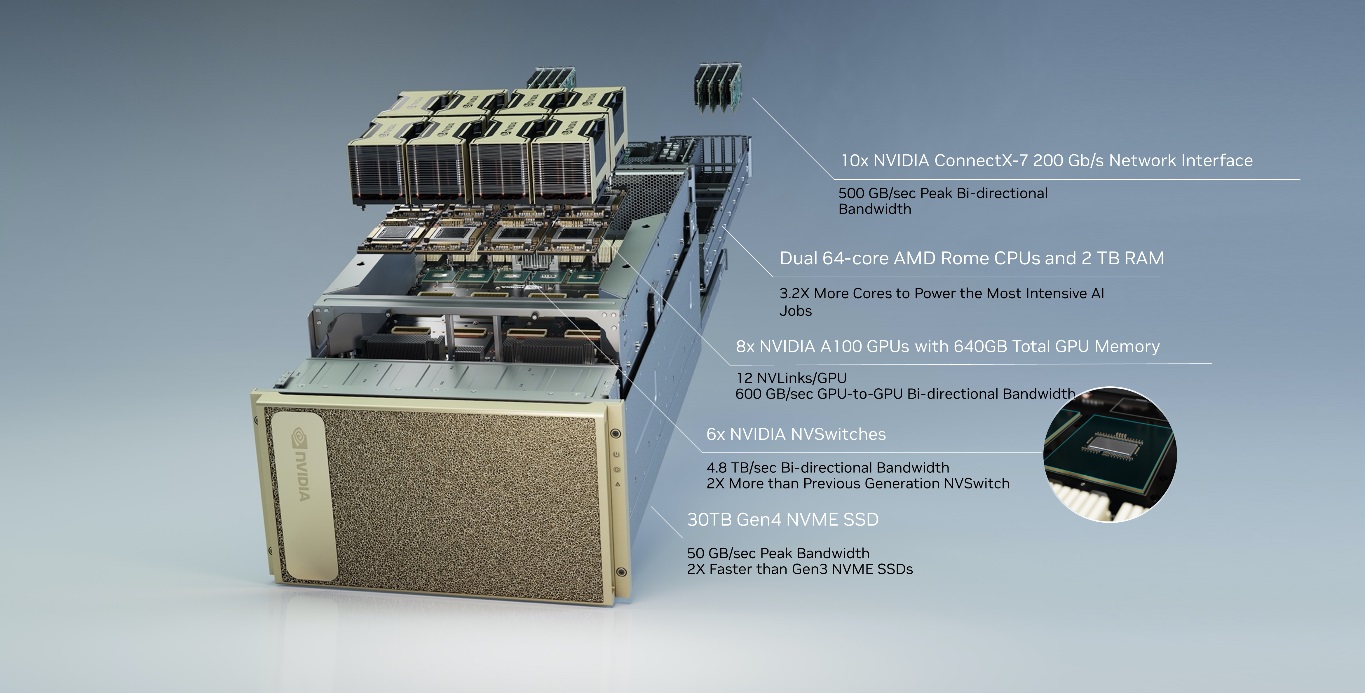

GPU Computing

While most computing has so far been done using Central Processing Units (CPUs), the expansive fields of machine learning and IoT have placed a new responsibility on Graphics Processing Units (GPUs). GPUs were originally used mainly for graphics-intensive workloads such as games, but are now being used for other purposes as well. They operate fundamentally differently from CPUs in that they can process a large number of threads in parallel, and this makes them ideal for a new generation of Data Centers.

Micro segmentation

Micro segmentation is a method of creating secure zones in a Data Center, curtailing problems that may arise from intrusive traffic that bypasses firewalls. All the resources in one place can be isolated from each other in such a way that if a breach does happen, the damage is immediately contained. Because it is done primarily in software, it doesn’t take long to implement and is very agile.
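The isolation idea can be sketched as a default-deny policy: traffic between zones is blocked unless a rule explicitly allows it. The example below is a toy model rather than the API of any real product; the class names, zone names, and port numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Rule:
    """Permits traffic from one zone to another on a single port."""
    source: str
    destination: str
    port: int


class SegmentPolicy:
    """Default-deny policy: only explicitly listed flows are allowed."""

    def __init__(self, allowed: Iterable[Rule]) -> None:
        self._allowed = frozenset(allowed)

    def is_allowed(self, source: str, destination: str, port: int) -> bool:
        return Rule(source, destination, port) in self._allowed


# Hypothetical three-tier layout: web -> app -> db, and nothing else.
policy = SegmentPolicy([
    Rule("web", "app", 8080),
    Rule("app", "db", 5432),
])

print(policy.is_allowed("web", "app", 8080))  # listed flow
print(policy.is_allowed("web", "db", 5432))   # web may not reach db directly
```

If the web zone is breached, the attacker still cannot open a connection to the database zone, which is how the damage stays contained.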

Non-volatile memory express

The breakneck speed at which everything is getting digitized these days is a definitive indication that data needs to move faster as well! While older storage protocols like Advanced Technology Attachment (ATA) and the Small Computer System Interface (SCSI) have shaped technology for decades, a newer protocol called Non-Volatile Memory Express (NVMe) is threatening their presence. As a storage protocol, NVMe accelerates the rate at which information is transferred between solid state drives and their host systems. In doing so, it greatly improves data transfer rates.

The future is here!

It is no secret that Data Centers are essential to the success of businesses, regardless of their size or industry, and they will only become more important as time progresses. A radical technological shift is currently underway, one that is bound to change the way a Data Center is conceptualized as well as built. What remains to be seen is which of these technologies will take center stage in the years to come.

Is your network infrastructure built for the future? Check today!

A network infrastructure forms one part of an organization's much larger IT infrastructure. Typically, a network infrastructure is likely to contain the following:

- Networking devices: such as LAN Cards, modems, cables, and routers

- Networking software: such as firewalls, device drivers, and security applications

- Networking services: such as DSL, satellite, and wireless services.

Continuous improvements in the technologies underlying network infrastructure mean that, as a business owner, you need to keep your network up to date with the latest trends. It is easy to see why network infrastructure plays such an important role in how an enterprise is managed and run: big businesses must keep thousands of devices connected to maintain consistency across the whole enterprise, and that requires a strong backbone. This is where a good network infrastructure comes in.

Considering the innovations and advancements in this sector, let’s look at some of the important trends which will help you find out if your infrastructure is up-to-date, and if not, where work is required:

Cloud networking systems

First and foremost, businesses have come to rely more and more on cloud systems over the years. So, to start with, you should check whether your network infrastructure makes use of cloud-based systems. With both public and private cloud options available, big enterprises are looking to shift their operations onto the cloud for fast, reliable, and secure operations. Hybrid clouds, which combine the strengths of public and private cloud networking systems, have also emerged as viable network infrastructure solutions for enterprises.

Security as the topmost priority

Enterprises are giving the utmost importance to security because data breaches and hacks have been on the rise. New innovations in tech bring new ways of attacking networks. To protect against these breaches, organizations are now moving beyond traditional firewalls and security software and turning up the heat on any malicious hacker looking to take down their network. Security has become by far the biggest motivator for upgrading network infrastructure these days.

A secure network infrastructure requires properly configured routers that can help protect against a DDoS (Distributed Denial of Service) attack. Further, a keen eye must be kept on operating systems, as they form the foundation of any layered security. If privileges within an OS are compromised, the security of the whole network can be put at risk.

An increased importance to analytics

For a long time, network analytics tools have pulled data from the network, but the tide is turning. Enterprises now run their own big data analytics tools to generate information on trends, and the network infrastructure pushes data to those tools instead. Companies like Cisco and Juniper provide telemetry from their networking infrastructure to local analytics systems, with Juniper using OpenNTI to analyze it. Is your network infrastructure in sync with your data analytics tools? If not, you should consider making it so; you don't want to be left behind while the world progresses.

SD-WAN

Software-defined wide area networks (SD-WAN) continue to be a hot trend in network infrastructure innovation. Although the broader concept of software-defined networking (SDN) hasn't been as successful as first imagined, SD-WAN seems to be the exception to the rule. Perhaps it is the only good thing that's come out of the SDN concept. SD-WAN is gaining wide acceptance because it offers relief from complex routing policies and lets you go beyond what conventional routing policies can do. Numerous vendors offer SD-WAN models for your infrastructure, and the cost varies from vendor to vendor. Ease of use is what drives enterprises towards SD-WAN.
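
To give a feel for how a software-defined policy can replace hand-written routing rules, here is a minimal sketch of per-application link selection. It is an illustrative assumption rather than any vendor's algorithm: the `Link` and `AppPolicy` types, the link names, and all the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    name: str
    latency_ms: float   # measured round-trip latency
    loss_pct: float     # measured packet loss, in percent
    cost_per_gb: float  # hypothetical price of sending traffic over the link


@dataclass(frozen=True)
class AppPolicy:
    max_latency_ms: float
    max_loss_pct: float


def choose_link(links: list[Link], policy: AppPolicy) -> Link:
    """Pick the cheapest link that meets the policy.

    If no link qualifies, fall back to the link with the lowest latency.
    """
    eligible = [
        link for link in links
        if link.latency_ms <= policy.max_latency_ms
        and link.loss_pct <= policy.max_loss_pct
    ]
    if eligible:
        return min(eligible, key=lambda link: link.cost_per_gb)
    return min(links, key=lambda link: link.latency_ms)


if __name__ == "__main__":
    links = [
        Link("mpls", latency_ms=20.0, loss_pct=0.1, cost_per_gb=0.50),
        Link("broadband", latency_ms=45.0, loss_pct=0.5, cost_per_gb=0.05),
        Link("lte", latency_ms=80.0, loss_pct=1.5, cost_per_gb=1.00),
    ]
    voice = AppPolicy(max_latency_ms=30.0, max_loss_pct=0.3)
    backup = AppPolicy(max_latency_ms=200.0, max_loss_pct=2.0)
    print(choose_link(links, voice).name)   # only the MPLS link meets the voice policy
    print(choose_link(links, backup).name)  # every link qualifies, so the cheapest wins
```

The point of the sketch is that the administrator states what each application needs, and the choice of path follows from current measurements instead of from static routes.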

If an enterprise incorporates the network trends discussed above, its infrastructure is likely to be ready not only for today but also for the future. To make sure your network infrastructure is always up to date, you need to keep up with the latest news, innovations, and trend changes in networking.

Here’s why your enterprise should have a disaster recovery system

Disaster can strike at any time. Whether natural or man-made, disasters can rarely be predicted accurately. Whatever the case, enduring and recovering from them can be a pretty rough job for your enterprise.

Disasters can potentially wipe out the entire company, with the enterprise’s data, employees, and infrastructure all at risk. From small equipment failure to cyber-attacks, recovery from disasters depends upon the nature of the events themselves. Disaster recovery is an area of security management and planning that aims to protect the company’s assets from the aftermath of negative events. While it is incredibly tough to completely recover from disasters within a short span of time, it is certainly advisable to have disaster recovery systems in place. In the time of need, these DR plans can present an effective, if not quick, method of recovery from negative events.

The importance of a Disaster Recovery System

Prevention is better than cure, but sometimes, we must make do with the latter. We cannot prevent every attack that can potentially cripple our enterprise, but we must make sure that we have the resources to recover. The need for disaster recovery systems can arise from various situations, some of which are discussed below.

- The unpredictability of nature

It is estimated that about 4 out of every 5 companies that experience an interruption in operations of 5 days or more go out of business. The wrath of Mother Nature is certainly a contributing factor to this statistic. One can seldom predict when Mother Nature is about to strike. Earthquakes, tsunamis, tornadoes, and hurricanes can cause irreparable damage to enterprises and businesses. Stopping these disasters is impossible; not having a disaster recovery plan in place, however, is inexcusable. Since we cannot predict how much damage nature will cause to our company, it is of prime importance to have a disaster recovery system in place so that your enterprise does not become part of that statistic.

- Technology failures can occur anytime

These days, customers want access to data and services every second of the day, every day of the year. Under such immense pressure, your enterprise systems may crumble. Machine and hardware failures can seldom be predicted, but it is certainly possible to resume normal work with minimal disruption and slowdown. The only way to do this is either to eliminate single points of failure from your network, which can be extremely expensive, or to have suitable recovery systems in place. Having recovery plans is perhaps your best bet for keeping your enterprise going at full speed.

- Human error

“Perfection is not attainable, but if we chase perfection we can catch excellence.”

Humans aren’t perfect, and are bound to make mistakes. The nature of these mistakes cannot be predicted. In order to survive all these unpredictable phases, you need to have an effective disaster recovery plan in place.

Enough about the reasons behind backup plans.

Let’s look at what a good disaster recovery system should include.

Your disaster recovery system must include…

Everything that could potentially save you from having to start your enterprise again from scratch. Your disaster recovery plan (DRP) should contain methods to recover from every potential interruption, from technical to natural. These include analyses of all threats, data backup facilities, and employee and customer protection, among other essentials.

With each passing day, you must also consider any additions or updates to your DR systems. Technology is improving day by day, and it is possible that what you’re currently trying to achieve may be made easier and quicker by the use of newer tech. Also identifying what’s most important, and where to innovate, is a crucial aspect of DR planning.

In order to ensure that your DR system is running at full speed, your enterprise can hold mock disaster recovery drills. This will help identify weak points in the system, and make people accustomed to the processes involved. It will make reacting to the actual disaster much more efficient and quick.

DRaaS

Disaster Recovery as a Service (DRaaS) has made it easier for enterprises to have disaster recovery systems ready. Various providers have reduced the load on enterprises when it comes to preparing for disasters by offering them custom-made, effective disaster recovery systems. Perhaps the most important thing to do now is not to wait. If your enterprise has a disaster recovery system in place, test it thoroughly for bottlenecks. If it doesn't, well, get one!

How Cloud Data Centers Differ from Traditional Data Centers

Every organization requires a Data Center, irrespective of its size or industry. A Data Center is traditionally a physical facility that companies use to store their information as well as the applications that are integral to their functioning. And while a Data Center is thought of as one thing, in reality it is composed of many pieces of technical equipment, depending on what needs to be stored: these can range from routers and security devices to storage systems and application delivery controllers. To keep all the hardware and software updated and running, a Data Center also requires a significant amount of supporting infrastructure, which can include ventilation and cooling systems, uninterruptible power supplies, backup generators, and more.

A cloud Data Center is significantly different from a traditional Data Center; the two have little in common beyond the fact that they both store data. A cloud Data Center is not physically located on a particular organization's premises. Instead, its hardware sits in the provider's facilities and you reach it over the Internet. When your data is stored on cloud servers, it is typically fragmented and duplicated across various locations for secure storage. In case there are any failures, your cloud services provider will make sure that there is a backup of your backup as well!
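
The fragment-and-duplicate idea can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how any specific provider stores data: the site names, the round-robin placement, and the `fragment`, `place`, and `reassemble` helpers are all hypothetical.

```python
def fragment(data: bytes, chunk_size: int) -> list[bytes]:
    """Split the data into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def place(chunks: list[bytes], locations: list[str], replicas: int = 2) -> dict:
    """Store every chunk at `replicas` distinct locations, round-robin."""
    if replicas > len(locations):
        raise ValueError("need at least as many locations as replicas")
    stores = {loc: {} for loc in locations}
    for idx, chunk in enumerate(chunks):
        for r in range(replicas):
            loc = locations[(idx + r) % len(locations)]
            stores[loc][idx] = chunk
    return stores


def reassemble(stores: dict, n_chunks: int, failed=frozenset()) -> bytes:
    """Rebuild the data using only the locations that have not failed."""
    out = []
    for idx in range(n_chunks):
        for loc, held in stores.items():
            if loc not in failed and idx in held:
                out.append(held[idx])
                break
        else:
            # No surviving location holds this chunk.
            raise RuntimeError(f"chunk {idx} is lost")
    return b"".join(out)


if __name__ == "__main__":
    data = b"customer-records-2024"
    chunks = fragment(data, chunk_size=4)
    stores = place(chunks, ["site-a", "site-b", "site-c"], replicas=2)
    # One site going down does not lose any data.
    print(reassemble(stores, len(chunks), failed={"site-b"}) == data)
```

With two copies of each chunk spread over three sites, any single site can fail without data loss; surviving two simultaneous failures would need a third copy.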

So how do these different modes of storage stack up against each other? Let’s compare them across four different metrics: Cost, Accessibility, Security and Scalability.

Cost

With a traditional Data Center, you will have to make various purchases, including the server hardware and the networking hardware. Not only is this a disadvantage in itself, you will also have to replace this hardware as it ages and gets outdated. Moreover, in addition to the cost of purchasing equipment, you will also need to hire staff to oversee its operations.

When you host your data on cloud servers, you are essentially using someone else's hardware and infrastructure, which saves a lot of the financial resources you would otherwise spend setting up a traditional Data Center. In addition, the provider takes care of various maintenance tasks, helping you make better use of your own resources.

Accessibility

A traditional Data Center allows you flexibility in terms of the equipment you choose, so you know exactly what software and hardware you are using. This facilitates later customizations since there is nobody else in the equation and you can make changes as you require.

With cloud hosting, accessibility may become an issue. If at any point you don’t have an Internet connection, then your remote data will become inaccessible, which might be a problem for some. However, realistically speaking, such instances of no Internet connectivity may be very few and far between, so this aspect shouldn’t be too much of a problem. Moreover, you might have to contact your cloud services provider if there’s a problem at the backend – but this too shouldn’t take very long to get resolved.

Security

Traditional Data Centers have to be protected the traditional way: you will have to hire security staff to ensure that your data is safe. An advantage here is that you will have total control over your data and equipment, which makes it safer to an extent. Only trusted people will be able to access your system.

Cloud hosting can, at least in theory, be riskier, because your data is reachable over the Internet and anyone with a connection can attempt to break in. In reality, however, most cloud service providers leave no stone unturned to ensure the safety of your data. They employ experienced staff to make sure that all the required security measures are in place so that your data is always in safe hands.

Scalability

Building your own infrastructure from scratch takes a lot of input in both financial and human terms. Among other things, you will have to oversee your own maintenance and administration, and for this reason it takes a long time to get off the ground. Setting up a traditional Data Center is a costly affair. Further, if you wish to scale up your Data Center, you will need to spend extra money on additional equipment.

With cloud hosting, however, there are no upfront costs in terms of purchasing equipment, and this leads to savings which can later be used to scale up. Cloud service providers have many flexible plans to suit your requirements, and you can buy more storage as and when you are ready for it. You can also reduce the amount of storage you have, if that’s your requirement.

Can’t decide which one to go for?

There is no universal right choice. Your choice should depend on what your business is prepared to take on, what your exact budget is, and whether or not you have an IT staff available to handle a physical Data Center.

What Are the Trending Research Areas in Cloud Computing Security?

Cloud computing is one of the hottest trends in technology. Most technological solutions are now on the cloud, and the ones remaining are vying to be on it. Due to its benefits, it has attracted IT leaders and entrepreneurs at all levels.

What is Cloud Computing?

Cloud computing is when many computers are linked through a real-time communication network. It basically refers to a network of remote servers that are hosted in a Data Center and can be accessed over the Internet from any browser. This makes it easier to store, manage, and process data than on a local server or personal computer.

What is Cloud Networking?

Cloud Networking is the term for accessing networking resources from a centralized third-party provider over a Wide Area Network (WAN). It refers to a concept in which unified cloud resources are accessible to customers and clients. In this concept, not only the cloud resources but also the network can be shared. With Cloud Networking, several management functions are handled in the cloud, so fewer devices are required to manage the network.

When data began to move to the cloud, security became a major point of debate, but cloud networking and cloud computing security have come a long way, with better identity and access management (IAM) and other data protection procedures.

Cloud networking and cloud computing security revolve around three things:

- Safeguarding user accounts in the cloud

- Protecting the data in the cloud

- Securing the applications that run in the cloud

Trending Research Areas in Cloud Computing Security

The following are the trending research areas in cloud computing security:

- Virtualization: Cloud computing itself is based on the concept of virtualization, in which a virtual version of a server, network, or storage device is created in place of a physical one. Hardware virtualization refers to virtual machines that act like a real computer with its own operating system. It is of two types: Full Virtualization and Para-Virtualization.

- Encryption: It is the process of protecting data by converting it into a form that cannot be read without the right key. Cloud computing uses advanced encryption algorithms to maintain the privacy of your data. Crypto-shredding is a related measure in which the encryption keys are deleted when the data is no longer needed, leaving the encrypted data unreadable. Two kinds of encryption studied in cloud computing security are Fully Homomorphic Encryption and Searchable Encryption.

- Denial of Service: It is a type of attack in which an intruder makes resources unavailable to their users by disrupting the services they rely on. The intruder overloads the system with a flood of requests so that genuine incoming requests are blocked. Application layer attacks and Distributed DoS attacks are some of its types.

- DDoS Attacks: DDoS stands for Distributed Denial of Service. It is a type of Denial of Service attack in which the hostile traffic comes from many devices, which makes it difficult to differentiate between malicious and genuine traffic. An application layer DDoS attack is one variety, in which the attacker targets the application layer of the OSI model.

- Cloud Security Dimensions: Software called a Cloud Access Security Broker (CASB) sits between cloud applications and cloud users, where it monitors activity and enforces the cloud security policies.

- Data Security: Security has always been the focal point of cloud-based services, and encryption is used to protect the data and maintain its privacy. Even so, vulnerabilities and loopholes can leave data in a public cloud exposed.

- Data Separation: Geolocation and tenancy are the major factors in data separation. Organizations should make sure that the geolocation in which their data is stored is a trusted one.
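
To make the Denial of Service item in the list above more concrete, here is a minimal sketch of one common countermeasure, per-client rate limiting over a sliding time window. The `SlidingWindowLimiter` class, its limits, and the client identifiers are hypothetical. A limiter like this only helps against a single noisy source; in a distributed attack the requests come from many devices, so per-client limits alone are not enough.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # client id -> timestamps of that client's accepted requests
        self._history = defaultdict(deque)

    def allow(self, client_id: str, now: float) -> bool:
        history = self._history[client_id]
        # Forget requests that have fallen out of the time window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_requests:
            return False  # over the limit: reject, so others are still served
        history.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=3, window_seconds=1.0)
    # One client sends four requests in quick succession.
    print([limiter.allow("client-a", t) for t in (0.0, 0.1, 0.2, 0.3)])
    # A different client is unaffected by client-a's burst.
    print(limiter.allow("client-b", 0.3))
```

Timestamps are passed in explicitly to keep the sketch deterministic; a real service would read them from a monotonic clock.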

Cloud computing is a broad research topic with plenty of room for new work. Projects in this area can aim to improve performance and speed, or to strengthen security algorithms so that files are better protected from attackers.

Sify allows enterprises to store and process data on a variety of Cloud options, making data-access mechanisms more efficient and reliable.